
t know that, and not that you asked me about Meta specifically, since the topic was the metaverse, but even Meta, which was one of the biggest proponents of the metaverse and immersive user experiences, seems to be more tempered about how long that’s going to take. Not an if, but a when, and they’re adjusting some of their investments to be longer term and less of that step-function, exponential growth that maybe –

VentureBeat: I think some of the conversation here around digital twins seems to touch on the notion that maybe the enterprise metaverse is really more like something practical that’s coming.

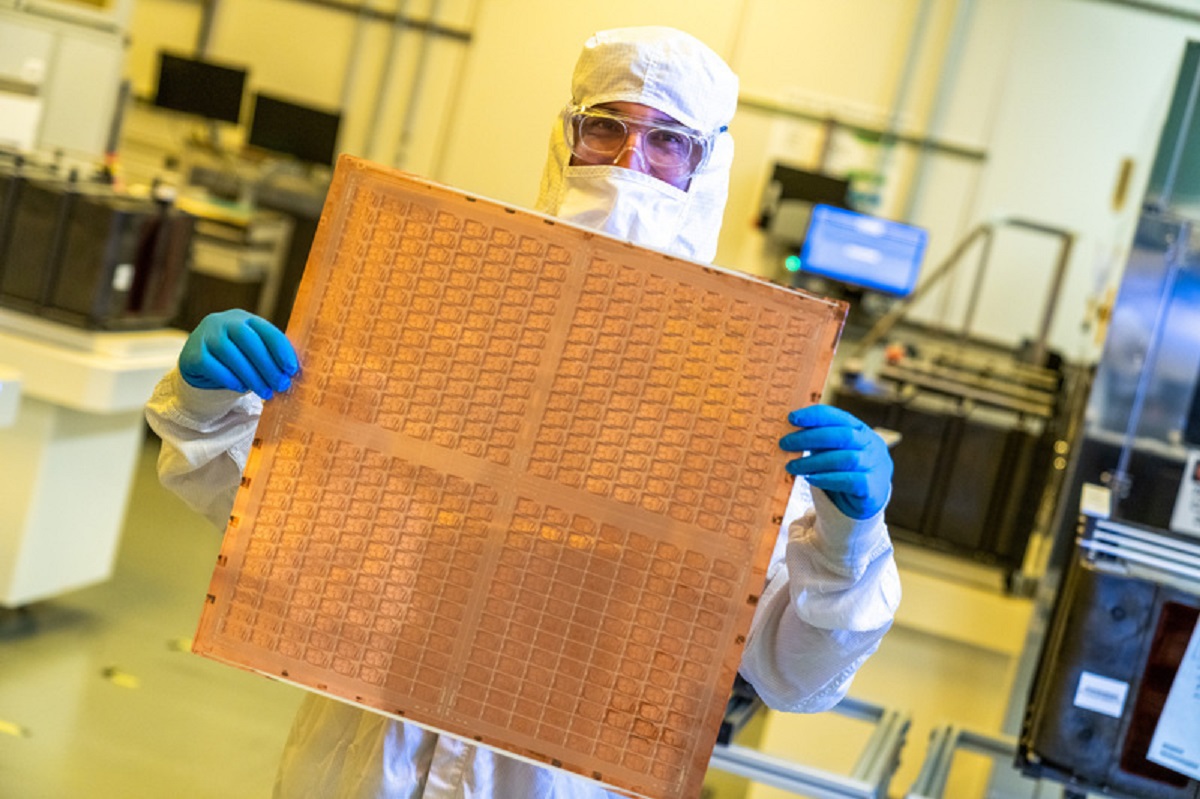

Rivera: That’s an excellent point, because even in our own factories we use headsets to do a lot of the diagnostics on these extraordinarily expensive semiconductor manufacturing tools, of which there are literally dozens in the world, not hundreds or thousands. Relatively speaking, few people have deep expertise in troubleshooting and diagnosing them. Training, sharing information, and running diagnostics to get those machines operating at even greater efficiency, whether among Intel’s own experts or together with the vendors, is a very real application that we are using today. We’re finding a wonderful level of efficiency and productivity because we don’t have to fly these experts around the world. We can share a lot of that insight and expertise in real time.

I think that’s a very real application. There are certainly applications in media and entertainment, as you mentioned. The medical field is another top-of-mind vertical where you would say, well, yes, there should be a lot more opportunity there as well. Over the arc of technology transitions and transformations, I do believe it’s going to drive demand for more compute, both in client devices, including PCs, headsets, and other bespoke devices, and on the infrastructure side.

VentureBeat: A more general one: how do you think Intel can grab some of that AI mojo back from Nvidia?

Rivera: Yeah. I think there’s a lot of opportunity to be an alternative to the market leader, and a lot of opportunity to educate people with our narrative that AI does not equal just large language models and does not equal just GPUs. We are seeing, and I think Pat talked about it in our last earnings call, that the CPU’s role in an AI workflow is giving us a tailwind with fourth-gen Xeon, particularly because we built integrated AI acceleration into that product through AMX, our Advanced Matrix Extensions. Every AI workflow needs some level of data management, data processing, and data filtering and cleaning before you train the model. That’s typically the domain of a CPU, and not just any CPU, but the Xeon CPU. Even Nvidia shows fourth-gen Xeon as part of its platform.
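
To make the “filtering and cleaning” step concrete, here is a minimal, hypothetical sketch of the kind of CPU-side text preprocessing that typically precedes training. The function name `clean_corpus`, the 10-character threshold `MIN_CHARS`, and the sample records are illustrative assumptions, not Intel tooling or any specific pipeline mentioned in the interview.

```python
import re

# Hypothetical threshold: records shorter than this (after normalization) are dropped.
MIN_CHARS = 10


def clean_corpus(records):
    """Normalize, filter, and deduplicate raw text records before training."""
    seen = set()
    cleaned = []
    for text in records:
        # Normalize: collapse runs of whitespace (spaces, tabs, newlines) and trim.
        normalized = re.sub(r"\s+", " ", text).strip()
        # Filter: drop empty records and very short fragments.
        if len(normalized) < MIN_CHARS:
            continue
        # Deduplicate case-insensitively, keeping the first occurrence.
        key = normalized.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


raw = [
    "  Hello   world, this is text. ",
    "hello world, this is text.",
    "",
    "ok",
    "Another  training\nexample here.",
]
print(clean_corpus(raw))  # ['Hello world, this is text.', 'Another training example here.']
```

Real pipelines add steps such as language detection and near-duplicate detection, but the shape (normalize, filter, deduplicate) is the same branchy, general-purpose work that suits a CPU.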

We do see a tailwind in the role the CPU plays in that front-end preprocessing and data management. The other thing we have learned from a lot of the work we’ve done with Hugging Face and other ecosystem partners is that there is a sweet spot of opportunity in small to medium-sized models, both for training and, of course, for inference. That sweet spot seems to be anything with 10 billion parameters or fewer, and a lot of the popular models we’ve been running, such as Llama 2, GPT-J, BLOOM, and BLOOMZ, are in that 7-billion-parameter range. We’ve shown that Xeon performs quite well from a raw performance perspective, and even better from a price-performance perspective, because the market leader charges so much for its GPUs. Not everything needs a GPU, and the CPU is well positioned for some of those small to medium-sized models.
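
A back-of-the-envelope estimate helps explain why a model of about 7 billion parameters is practical outside a GPU: its weights fit in ordinary system memory. The sketch below assumes standard storage widths (4 bytes for fp32, 2 for bf16, 1 for int8, half a byte for int4) and counts weights only; activations, KV cache, and framework overhead are ignored, so real memory use will be higher.

```python
# Approximate storage per parameter for common numeric formats (bytes).
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params, dtype):
    """Memory for the model weights alone, in gigabytes (1 GB = 10**9 bytes).

    Ignores activations, KV cache, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp32", "bf16", "int8", "int4"):
    print(f"7B parameters at {dtype}: {weight_memory_gb(7e9, dtype):.1f} GB")
```

This prints 28.0, 14.0, 7.0, and 3.5 GB respectively. At bf16, about 14 GB of weights sits comfortably within the DRAM of many servers, which is one reason the sub-10-billion-parameter range is a natural fit for CPUs.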

Then, certainly, when you get to the larger, more complex, multimodal models, we are showing up quite well with Gaudi2, and we also have a GPU. Truthfully, Dean, we’re not going to go full frontal, take on the market leader, and somehow cut into their share tens of percentage points at a time. We’re the underdog, and we have a different value proposition: being open and investing in the ecosystem. We have contributed to many open source and open standards projects over many years, and we have a demonstrated track record of investing in ecosystems, lowering barriers to entry, and accelerating the rate of innovation through broader market participation. We believe that embracing openness always leads to success in the long term. Our customers are actively seeking the best alternative, and we have a diverse range of hardware products that cater to various AI workloads across different architectures. We are investing heavily in software to simplify deployment and increase productivity. That is what developers truly care about.

One common concern is the CUDA moat, which can be challenging to overcome. However, the majority of AI application development, about 80% of it, happens at the framework level and above. By integrating our software extensions with popular frameworks and tools like TensorFlow, PyTorch, Triton, JAX, OpenXLA, or Mojo, we make it so developers don’t need to worry about the underlying layer, whether that’s oneAPI or CUDA. They simply want an easy-to-use, deployable, abstracted software layer. This is an evolving aspect of our industry.

Now, let’s talk about Numenta’s recent collaboration with Intel. Numenta spent 20 years studying the brain and is now bringing its software to market. The company claims its software can accelerate AI processing by 10 to 100 times, and, interestingly, it runs on CPUs rather than GPUs. Numenta believes that for its specific needs, the flexibility of CPUs outweighs the repetitive processing power of GPUs. This approach could not only dramatically lower costs but also let AI be deployed in more diverse environments.

When it comes to our market position, we have excelled in pre-processing, data management, and the inference and deployment phase of AI workflows. Traditionally, two-thirds of this market has relied on CPUs, especially the latest generation CPUs. The edge inference segment is experiencing the fastest growth in the AI market, estimated to increase by 40% over the next five years. With our highly programmable and widely available CPUs, we are well-positioned to capitalize on this trend.

However, we don’t believe in a one-size-fits-all approach. The market and technology are evolving rapidly, and we are prepared with a comprehensive range of architectures: scalar, vector, and matrix-multiply processing, spatial architectures with FPGAs, and an IPU portfolio. Our focus now is on investing more in software and reducing barriers to entry. Our DevCloud, for example, fits this strategy by giving developers a sandbox environment to experiment and innovate. We want to make it easy for them to try new ideas and accelerate innovation. This is a key differentiator between Intel and the GPU market leader.

Moving on to brain-like architectures: they do show promise. Numenta argues that the brain runs on very little energy, and that replicating this efficiency should be the ultimate goal. However, it is still uncertain whether we can fully duplicate the brain’s capabilities. Nevertheless, what was once considered impossible is now becoming possible. The AI landscape is constantly evolving, and our role is to provide accessible tools and technologies that empower innovators, where accessibility means both affordability and ease of use.

Access to compute has always been about driving down cost and increasing accessibility. The easier it is to deploy, the more utilization, creativity, and innovation it brings. This was evident in the days of virtualization, when making assets more accessible and economical led to more innovation and more demand. Economics plays a significant role in driving progress, as seen in Moore’s Law and in Intel’s commitment to affordability, accessibility, and investment in the ecosystem.

When it comes to low power and cost constraints, we are continuously working to improve power efficiency and cost curves while increasing compute capabilities. For example, our recent announcement about Sierra Forest, doubling the number of cores to 288, showcases our commitment to making computing more affordable, economical, and power-efficient. This is a crucial aspect, especially in regions like China and Europe, where customers are driving us towards these goals.

In a competitive environment where AI is being promoted on smartphones, we believe that the PC will remain a critical productivity tool in the enterprise. While smartphones are ubiquitous, the PC offers unique advantages, and both devices can coexist. AI will be infused into every computing platform, including the PC, the edge, and cloud infrastructure. Despite the impact of COVID and the temporary surge in multi-device usage, we don’t see a decline in the relevance of PCs. They continue to be essential tools for productivity alongside smartphones.

Infusing more AI into the PC is not just a trend but a necessity going forward. We are leading the way in this area, and we are excited about the countless use cases that will be unlocked by integrating more processing power into the platform.

Regarding gaming and AI, there have been challenges in implementing large language models into game characters due to the high costs involved. However, we understand the need to find a balance between cost and functionality. As we continue to develop AI technologies, we aim to make them more cost-effective and efficient, ensuring that AI doesn’t become just a bunch of data to search through but remains generative and intelligent.

The concept of gathering and searching through personal data, such as voice calls, can be overwhelming. However, the real value lies in AI’s ability to produce meaningful insights and actions from that data. The ability to sort through and search data is just the beginning, and the true payoff comes from AI’s capability to generate intelligent outcomes.

In summary, we are committed to driving down costs, increasing accessibility, and improving power efficiency in computing. The PC remains a vital tool in the enterprise, and AI will continue to be integrated into every computing platform. We are excited about the possibilities AI brings and are focused on delivering innovative solutions that unlock its full potential.

There’s a lot of exciting algorithmic innovation happening in AI. Researchers are finding ways to reach the same level of accuracy with models that are much smaller and cheaper to train. Gone are the days when developing a model took millions of dollars, months of training, and massive amounts of power. Now we’re seeing advances in quantization, knowledge distillation, and pruning. Just look at the success of LLaMA and Llama 2, which have shown that high accuracy is achievable at a fraction of the compute and power cost.
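
Of the techniques named above, quantization is the simplest to illustrate. The sketch below shows symmetric per-tensor int8 quantization in plain Python, assuming a flat list of float weights. The function names are hypothetical; production toolkits typically add per-channel scales, calibration data, and optimized integer kernels, none of which is shown here.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from int8 codes."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Storing each weight in 1 byte instead of 4 (fp32) cuts weight memory roughly fourfold, and because every value is rounded to the nearest step, the per-weight error stays within half of `scale`. That trade-off is why quantized models can run on CPUs and client devices.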

In terms of economics and use cases, foundation models are like weather models: only a few developers create them, but countless users benefit from their predictions. The key is to fine-tune these models for specific contexts and datasets. For example, an enterprise dataset has its own linguistics and terminology; because that domain is narrower than general-purpose text, it can be served by a smaller model that needs less compute. This makes AI more affordable and accessible, whether it’s deployed within an enterprise or on a client device.

When it comes to AI intelligence, it’s not solely dependent on the amount of data. Even a PC with a neural processing engine or a CPU can handle the processing requirements of smaller datasets. The compute power needed is well within reach of these devices.
