Large language models like ChatGPT and Llama-2 are notorious for their extensive memory and computational demands, making them costly to run. Trimming even a small fraction of their size can lead to significant cost reductions.

To address this issue, researchers at ETH Zurich have unveiled a revised version of the transformer, the deep learning architecture underlying language models. The new design reduces the size of the transformer considerably while preserving accuracy and increasing inference speed, making it a promising architecture for more efficient language models.

Transformer blocks

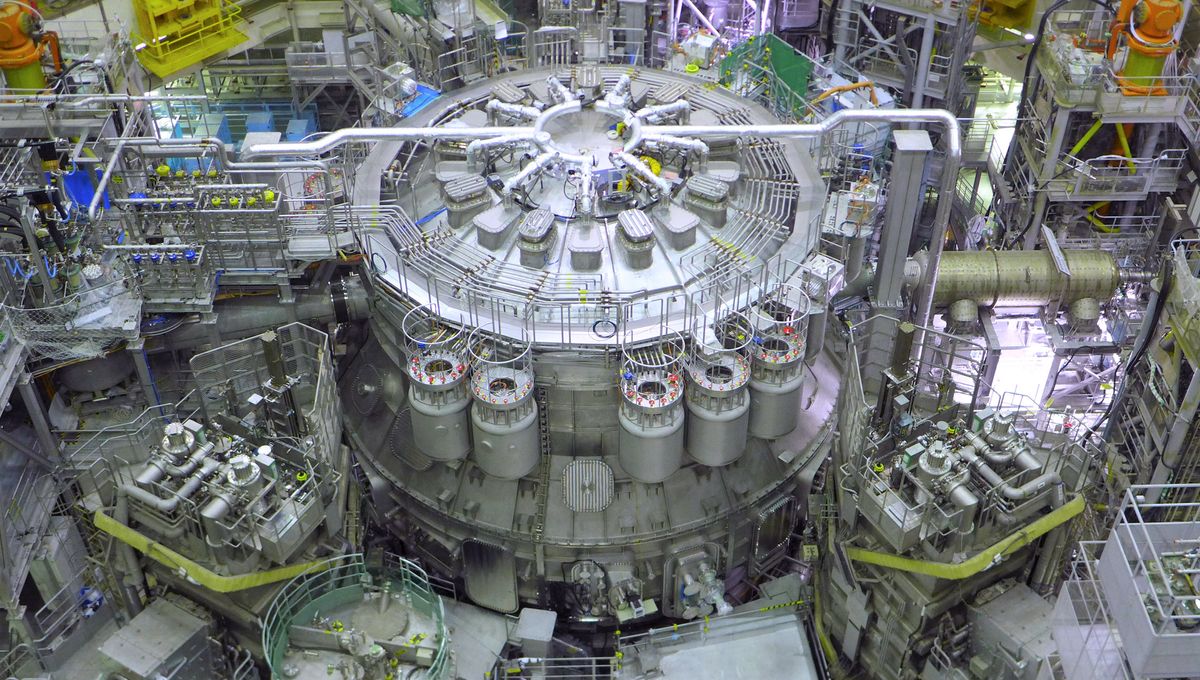

Language models operate on a foundation of transformer blocks, uniform units adept at parsing sequential data, such as text passages.

Within each block, there are two key sub-blocks: the “attention mechanism” and the multi-layer perceptron (MLP). The attention mechanism acts like a highlighter, selectively focusing on different parts of the input data (like words in a sentence) to capture their context and importance relative to each other. This helps the model determine how the words in a sentence relate, even if they are far apart.
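To make the highlighter analogy concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not the ETH Zurich design or any library's implementation: it uses a single attention head, skips the learned query, key and value projections that real transformers apply, and omits the MLP sub-block entirely. The function names `softmax` and `attention` and the toy `tokens` vectors are invented for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights). weights[i][j] is how strongly
    position i "highlights" position j.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input (assumed for illustration): three "word" vectors, where the
# first and third are identical and the second is unrelated.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]

# Self-attention: the same vectors act as queries, keys and values.
outputs, weights = attention(tokens, tokens, tokens)

for i, row in enumerate(weights):
    print(i, [round(w, 3) for w in row])
```

In this toy input, the first word gives the third word as much weight as it gives itself, and less weight to the unrelated second word sitting between them. That is the sense in which attention can relate words regardless of how far apart they are.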
